Been a minute, eh?

I recently had one of those nagging little side-quests pop up that I couldn’t ignore. It involved building a simple test harness for network connectivity in Azure, where two VMs would be dynamically stood up and connectivity tests run from within each VM using native, common testing commands.

For me, the point wasn’t to build some groundbreaking piece of automation, but rather to prove out the concept and walk through an example of taking a simple task and slowly iterating on it.

The Environment

To get this going, I wanted two peered VNETs: one in West US and one in East US. Both are /24s with a single /27 subnet. In a real-world deployment, this would live in a hub/spoke environment where things like firewalls, routing rules, and even NSGs could interfere with traffic.
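Before peering, it’s worth sanity-checking the address plan. Azure won’t peer VNETs whose address spaces overlap, and it reserves five addresses in every subnet, so each /27 leaves 27 usable IPs. Here is a minimal sketch in Python (rather than the post’s PowerShell) using the standard ipaddress module. The 10.1.0.0/24 and 10.2.0.0/24 ranges are hypothetical, since the post doesn’t list the real ones.

```python
import ipaddress

# Hypothetical address spaces; substitute your own VNET and subnet ranges.
WEST_VNET = ipaddress.ip_network("10.1.0.0/24")
WEST_SUBNET = ipaddress.ip_network("10.1.0.0/27")
EAST_VNET = ipaddress.ip_network("10.2.0.0/24")
EAST_SUBNET = ipaddress.ip_network("10.2.0.0/27")

AZURE_RESERVED_PER_SUBNET = 5  # Azure reserves 5 addresses in every subnet


def check_layout(vnet_a, subnet_a, vnet_b, subnet_b):
    """Return a list of problems with a two-VNET peering layout (empty means OK)."""
    problems = []
    if vnet_a.overlaps(vnet_b):
        problems.append(f"{vnet_a} overlaps {vnet_b}; the VNETs can't be peered")
    for subnet, vnet in ((subnet_a, vnet_a), (subnet_b, vnet_b)):
        if not subnet.subnet_of(vnet):
            problems.append(f"{subnet} is not inside {vnet}")
    return problems


def usable_hosts(subnet):
    """Addresses left for VMs after Azure's reservations."""
    return subnet.num_addresses - AZURE_RESERVED_PER_SUBNET


print(check_layout(WEST_VNET, WEST_SUBNET, EAST_VNET, EAST_SUBNET))  # []
print(usable_hosts(WEST_SUBNET))  # 27
```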

Also, because I don’t want my code to store anything sensitive like passwords, I pull them from an Azure Key Vault instead.
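One way to keep the password out of code entirely is a Key Vault reference in the ARM parameter file. Resource Manager resolves it at deployment time, provided the vault has template deployment access enabled. Below is a minimal Python sketch that builds such an entry. The subscription ID, resource group, vault, and secret names are placeholders. The sketch also assumes `adminPassword` matches the parameter name in your exported template.

```python
import json


def key_vault_reference(subscription_id, resource_group, vault_name, secret_name):
    """Build an ARM parameter-file entry that pulls a secret from Key Vault at deploy time."""
    vault_id = (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.KeyVault/vaults/{vault_name}"
    )
    return {"reference": {"keyVault": {"id": vault_id}, "secretName": secret_name}}


params = {
    "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#",
    "contentVersion": "1.0.0.0",
    "parameters": {
        # Placeholder IDs and names, for illustration only.
        "adminPassword": key_vault_reference(
            "00000000-0000-0000-0000-000000000000", "rg-shared", "kv-example", "vm-admin-password"
        ),
    },
}
print(json.dumps(params, indent=2))
```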

First step – VMs

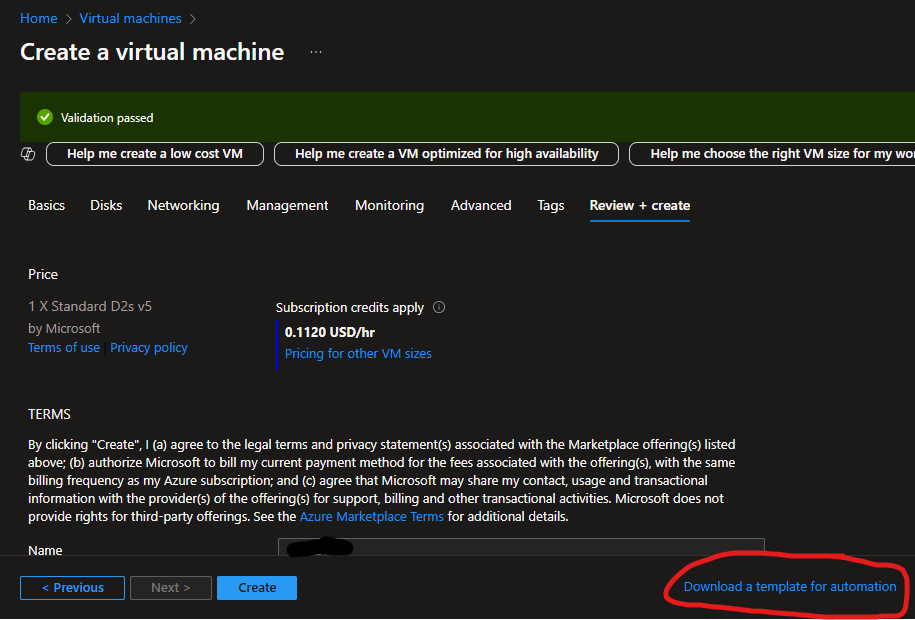

If I’m going to test connectivity between two networks, I suppose I need source and destination VMs. Building VMs through the portal is pretty straightforward, but the portal also doubles as a learning opportunity for taking an ‘as code’ approach. When clicking through the portal to build a VM, on the final “review and create” step you can click “Download a template for automation”, as shown below:

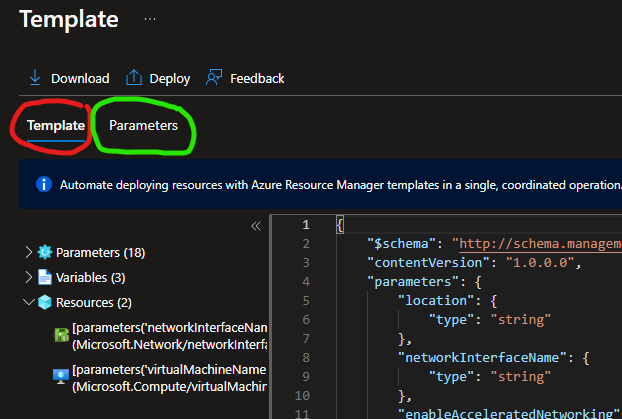

This takes you to a page where you can download two files which, together, can be used to build this same VM using PowerShell (or the Azure CLI, but I’m sticking with PowerShell).

The exact contents of these two files are out of scope for this post; plenty has been written on ARM templates and their usage. What matters here is that the parameters file holds all of the inputs and customizations you selected for the VM.

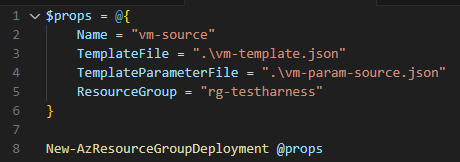

For my purposes, I copied everything in the template to a file I called vm-template.json and everything in the Parameters tab to a file called vm-param-source.json. Then, to build this VM, I simply run the following bit of code:
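A deployment fails in two cases: when the parameters file supplies a name the template doesn’t declare, or when it omits a template parameter that has no defaultValue. A small pre-flight check can catch both before the deployment is kicked off. It's sketched here in Python, and the function name is hypothetical. It only compares the two JSON documents, which in practice you would load from vm-template.json and vm-param-source.json with json.load.

```python
def missing_or_unknown(template, parameters_file):
    """Compare an ARM template with a parameters document before deploying.

    Returns (missing, unknown): template parameters with no defaultValue that
    the file doesn't supply, and supplied names the template doesn't declare.
    """
    declared = template.get("parameters", {})
    supplied = parameters_file.get("parameters", {})
    missing = sorted(
        name for name, spec in declared.items()
        if "defaultValue" not in spec and name not in supplied
    )
    unknown = sorted(name for name in supplied if name not in declared)
    return missing, unknown


# Tiny in-memory example; real documents would come from json.load(open(...)).
template = {
    "parameters": {
        "location": {"type": "string"},
        "virtualMachineName": {"type": "string"},
        "virtualMachineSize": {"type": "string", "defaultValue": "Standard_B1s"},
    }
}
params = {"parameters": {"location": {"value": "westus"}, "vmName": {"value": "vm-src"}}}

print(missing_or_unknown(template, params))  # (['virtualMachineName'], ['vmName'])
```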

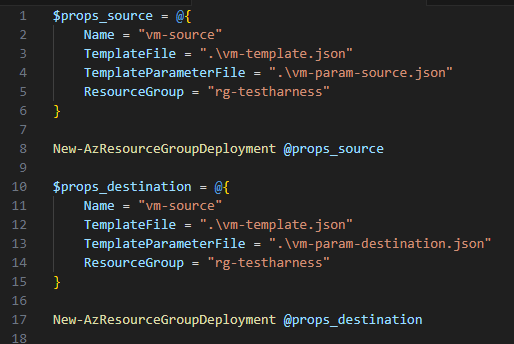

It’s not exciting, but it works. And I now have everything I need to build a second machine, too. To do that, I made a copy of vm-param-source.json called vm-param-destination.json and customized its values (the parameters of the second VM: name, location, network, subnet, size, etc.). Then, voilà: running the same deployment against the new parameter file gives me a destination VM.
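Copying and hand-editing the JSON works, but the same step can be scripted. The Python sketch below clones a parameters document and overrides selected values. The parameter names (location, virtualMachineName, subnetName) are assumptions based on typical portal exports, so check them against your own file.

```python
import copy
import json


def derive_params(source, overrides):
    """Return a deep copy of an ARM parameters document with some values replaced.

    Every name in `overrides` must already exist in the source, so a typo
    raises instead of silently adding a parameter the template doesn't know.
    """
    derived = copy.deepcopy(source)
    for name, value in overrides.items():
        if name not in derived["parameters"]:
            raise KeyError(f"unknown parameter: {name}")
        derived["parameters"][name] = {"value": value}
    return derived


# Hypothetical stand-in for the contents of vm-param-source.json.
source = {
    "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#",
    "contentVersion": "1.0.0.0",
    "parameters": {
        "location": {"value": "westus"},
        "virtualMachineName": {"value": "vm-nettest-src"},
        "subnetName": {"value": "snet-west"},
    },
}

destination = derive_params(
    source,
    {"location": "eastus", "virtualMachineName": "vm-nettest-dst", "subnetName": "snet-east"},
)
print(json.dumps(destination, indent=2))
```

Writing `destination` out with json.dump to vm-param-destination.json would reproduce the hand-made copy.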

Running Remote Commands – The Tests

With the code to build VMs taken care of, the next step was figuring out how to conduct the tests. I’m thinking simple ones: ping, traceroute, and checking TCP/80 or TCP/443 with nc. Since these are Linux-based VMs, I have all the native tools I need. But how to run them…
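Here is one way those tests might be bundled into a single bash script to hand to a VM. This Python sketch generates the script text. The function name, port list, and timeouts are hypothetical. Validating the target as an IP address first keeps arbitrary text out of the generated shell. Depending on the image, traceroute may need to be installed before it can be used.

```python
import ipaddress


def build_test_script(target, ports=(80, 443), ping_count=4, timeout_s=5):
    """Return a bash script that pings, traceroutes, and TCP-probes `target`."""
    ip = str(ipaddress.ip_address(target))  # raises ValueError for anything but an IP
    lines = [
        "#!/bin/bash",
        f"echo '== ping {ip} =='",
        f"ping -c {int(ping_count)} {ip}",
        f"echo '== traceroute {ip} =='",
        f"traceroute {ip}",
    ]
    for port in ports:
        # -z: connect without sending data, -v: report the result, -w: timeout in seconds
        lines.append(f"echo '== TCP/{int(port)} =='")
        lines.append(f"nc -zv -w {int(timeout_s)} {ip} {int(port)}")
    return "\n".join(lines) + "\n"


print(build_test_script("10.2.0.4"))
```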

My first thought was SSH, which would need Bastion, and then I’d have to script a way to pull credentials or SSH keys or something. But that’s overly complicated. Under the hood, Azure runs VMs on a hypervisor, and any good hypervisor has a way to talk to a VM’s guest OS through tools or agent software. Turns out Azure is no different: the VM agent supports Run Command, which PowerShell exposes as the Invoke-AzVMRunCommand cmdlet.

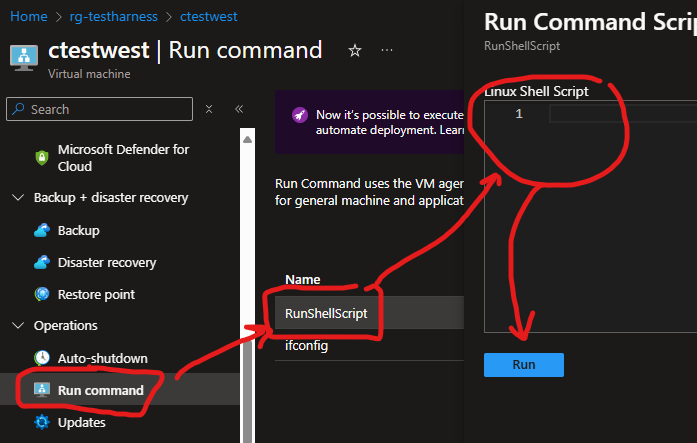

There’s actually a way through the portal to do this as well:

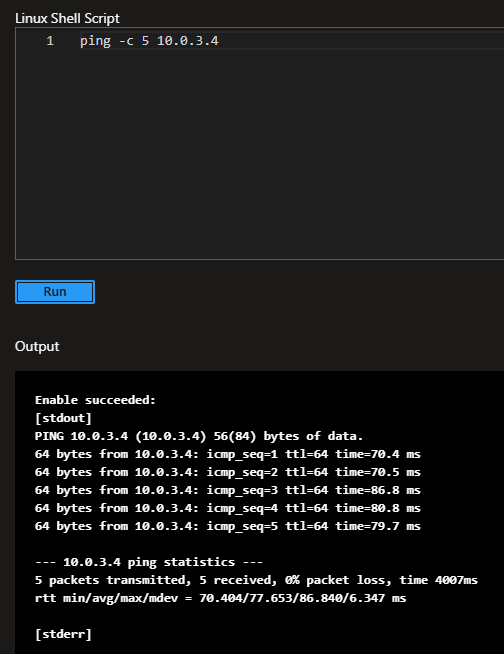

An example of what that looks like is below, where I ran a ping test from my source VM to my destination VM.

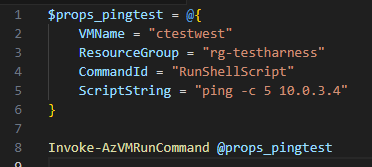

Here is what this looks like when done as code:

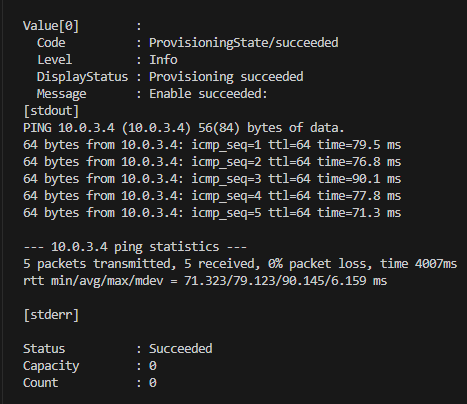

The value of the “CommandId” parameter comes from the portal and is one of the built-in options (circled in red in the image earlier); for Linux VMs it is RunShellScript.

Running it produced a successful test, and I now have a way to issue remote commands to both my source and destination test VMs without SSH passwords or keys. This pattern lets me run the tests from both the source and the destination VMs, verifying connectivity in both directions.
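To run the tests in both directions, each VM takes a turn as the runner, with the other VM's private IP as the target. The Python sketch below swaps in the Azure CLI’s `az vm run-command invoke`, the CLI counterpart of Invoke-AzVMRunCommand. It only builds the argument lists and does not run them. The resource groups, VM names, and IPs are hypothetical.

```python
import shlex
from dataclasses import dataclass


@dataclass(frozen=True)
class NetTestVm:
    resource_group: str
    name: str
    private_ip: str


def run_command_argv(runner, script_lines):
    """Build an `az vm run-command invoke` call that runs script_lines on `runner`."""
    return [
        "az", "vm", "run-command", "invoke",
        "--resource-group", runner.resource_group,
        "--name", runner.name,
        "--command-id", "RunShellScript",
        "--scripts", *script_lines,
    ]


def bidirectional_plan(a, b, make_script):
    """One call per direction: a tests b, then b tests a."""
    return [
        run_command_argv(a, make_script(b.private_ip)),
        run_command_argv(b, make_script(a.private_ip)),
    ]


# Hypothetical VMs; in practice the names and IPs come from the deployments.
src = NetTestVm("rg-nettest-west", "vm-nettest-src", "10.1.0.4")
dst = NetTestVm("rg-nettest-east", "vm-nettest-dst", "10.2.0.4")

for argv in bidirectional_plan(src, dst, lambda ip: [f"ping -c 4 {ip}"]):
    print(shlex.join(argv))
    # subprocess.run(argv, check=True, capture_output=True, text=True) would execute it
```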

From Walking to Running

If manually building VMs through the portal, logging into each of them, and running tests is the crawl phase, then everything I’ve done to this point is the move from crawling to walking. So where can we go from here? Well, I’m still tinkering, but there are plenty of opportunities.

Parameterizing Inputs

The first thing I’m looking at is asking for inputs, namely subscription, resource group, virtual network, and subnet, for both the source and destination VMs. I don’t care what the VMs are named, since destroying them once testing is done is just good hygiene. And because ARM parameter files are merely JSON, we can build them dynamically from the user’s input.
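Here is a Python sketch of building the parameters document from those inputs. The virtual network resource ID follows the standard Azure format. Several other details are assumptions: the parameter names, the `nettest-` naming scheme, and the extra location input (a VM has to be in the same region as its VNET). In practice the values would come from prompts such as input().

```python
import secrets

SCHEMA = "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#"


def vnet_resource_id(subscription_id, resource_group, vnet_name):
    """Standard Azure resource ID for a virtual network."""
    return (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Network/virtualNetworks/{vnet_name}"
    )


def throwaway_name(role):
    """Disposable VM name such as 'nettest-src-1a2b3c'; nobody needs to remember it."""
    return f"nettest-{role}-{secrets.token_hex(3)}"


def params_from_inputs(role, subscription_id, resource_group, vnet_name, subnet_name, location):
    """Build an ARM parameters document for one test VM (parameter names assumed)."""
    return {
        "$schema": SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": {
            "location": {"value": location},
            "virtualMachineName": {"value": throwaway_name(role)},
            "virtualNetworkId": {"value": vnet_resource_id(subscription_id, resource_group, vnet_name)},
            "subnetName": {"value": subnet_name},
        },
    }


# Hypothetical inputs; an interactive version would gather these with input().
source_params = params_from_inputs(
    "src", "00000000-0000-0000-0000-000000000000", "rg-net-west", "vnet-west", "snet-west", "westus"
)
print(source_params["parameters"]["virtualMachineName"]["value"])
```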

Cleaning Up the Outputs

As you can see above, the output includes a lot more than is really needed, although Azure does provide section headers – [stdout] and [stderr]. With some fancy string manipulation, we can extract the status from the end, and maybe save just the contents of [stdout] to some fancier output location. Which brings me to the next item…
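The [stdout] and [stderr] headers make the message easy to split. Below is a minimal Python sketch. The sample message mimics the shape of RunShellScript output but is illustrative, not captured from the post. The ping summary regex assumes Linux iputils ping wording.

```python
import re


def parse_run_command_message(message):
    """Split Run Command output into preamble, [stdout], and [stderr] sections."""
    out_tag, err_tag = "[stdout]", "[stderr]"
    out_at, err_at = message.find(out_tag), message.find(err_tag)
    if out_at == -1 or err_at < out_at:
        raise ValueError("expected a [stdout] section followed by [stderr]")
    return {
        "preamble": message[:out_at].strip(),
        "stdout": message[out_at + len(out_tag):err_at].strip("\n"),
        "stderr": message[err_at + len(err_tag):].strip("\n"),
    }


def ping_loss_percent(stdout):
    """Packet loss from a Linux ping summary line, or None if there isn't one."""
    match = re.search(r"(\d+(?:\.\d+)?)% packet loss", stdout)
    return float(match.group(1)) if match else None


# Illustrative message in the shape RunShellScript returns (not real output).
sample = (
    "Enable succeeded: \n[stdout]\n"
    "PING 10.2.0.4 (10.2.0.4) 56(84) bytes of data.\n"
    "4 packets transmitted, 4 received, 0% packet loss, time 3004ms\n"
    "\n[stderr]\n"
)
parsed = parse_run_command_message(sample)
print(parsed["preamble"])                   # Enable succeeded:
print(ping_loss_percent(parsed["stdout"]))  # 0.0
```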

Storing the Output

If I didn’t want to think about the output, it could be sent to a storage account and never looked at again. If I wanted to do something smarter with it, I could send it to a Log Analytics workspace or even Cosmos DB.
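Whichever destination wins, it helps to shape each test result as one JSON record. Here is a hypothetical schema sketched in Python, with field names of my own choosing. JSON Lines suits a blob in a storage account. The `id` field is included because Cosmos DB requires one on every item.

```python
import json
import uuid
from datetime import datetime, timezone


def make_result_record(source_vm, target_ip, test, succeeded, stdout, stderr=""):
    """One JSON-serializable record per test (hypothetical schema)."""
    return {
        "id": str(uuid.uuid4()),  # Cosmos DB requires an id on every item
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "sourceVm": source_vm,
        "targetIp": target_ip,
        "test": test,
        "succeeded": succeeded,
        "stdout": stdout,
        "stderr": stderr,
    }


def to_json_lines(records):
    """Serialize records as JSON Lines, one object per line, e.g. for a blob upload."""
    return "".join(json.dumps(record, sort_keys=True) + "\n" for record in records)


records = [
    make_result_record("vm-nettest-src", "10.2.0.4", "ping", True, "0% packet loss"),
    make_result_record("vm-nettest-src", "10.2.0.4", "tcp/443", False, "", "Connection timed out"),
]
print(to_json_lines(records), end="")
```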

The Coup de Grâce – Pipeline Initiation

A pipeline can be an extremely useful tool for orchestrating something like this, and it’s the final step in the crawl -> walk -> run path. Being able to fire off a pipeline, feed it some inputs, and have it build two VMs, conduct the tests, store the results somewhere, and clean up the resources? That would be pretty slick, and that’s where I’d take this little side quest if it ever turned into more of a main quest for me…
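Whatever orchestrates it, the key property is that cleanup runs even when a stage fails, so no test VMs linger. The minimal Python sketch below shows that shape, with each stage as a plain stand-in function. In an Azure DevOps pipeline, the same idea is usually a cleanup job with `condition: always()`.

```python
from typing import Any, Callable, Iterable


def run_pipeline(stages: Iterable[Callable[[Any], Any]], cleanup: Callable[[], None]) -> Any:
    """Run stages in order, feeding each one the previous result; always clean up."""
    result: Any = None
    try:
        for stage in stages:
            result = stage(result)
        return result
    finally:
        cleanup()  # runs on success and also when any stage raises


# Stand-in stages that just record what ran; real ones would deploy, test, and store.
log = []


def build(_):
    log.append("build")
    return ["vm-nettest-src", "vm-nettest-dst"]


def run_tests(vms):
    log.append("test")
    return {vm: True for vm in vms}


def store(results):
    log.append("store")
    return results


def teardown():
    log.append("cleanup")


print(run_pipeline([build, run_tests, store], teardown))  # {'vm-nettest-src': True, 'vm-nettest-dst': True}
print(log)  # ['build', 'test', 'store', 'cleanup']
```

Deploying each run into its own resource group would make teardown a single resource group delete. That would also catch anything a failed build left half-created.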