I’ve been involved in a project to deploy Azure’s vWAN, and we ran into some interesting design challenges around routing. This was mostly because the technology was fairly new to everyone involved, so we didn’t have a lot of deep technical expertise to lean on. I spent some time going down the rabbit hole to gain an understanding of what we were experiencing and how to “route” around it. This is a summary of what I learned and the tinkering I’ve done.

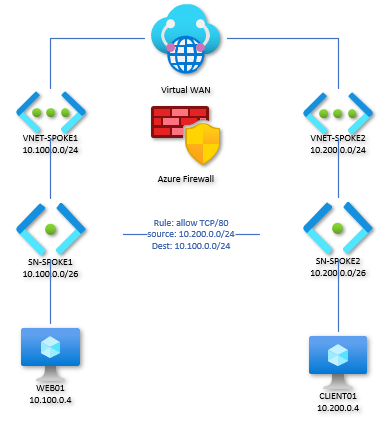

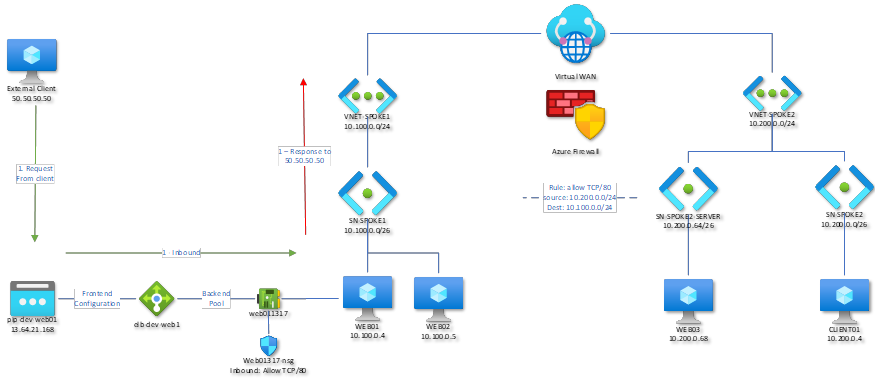

Let’s start at the beginning with my lab, shown above. This depicts a hub-and-spoke network leveraging Azure Firewall in the hub, with two spokes and a VM in each. Server WEB01 is a simple Windows Server running a static “Hello, I’m WEB01” web page on TCP/80. Nothing fancy. A single firewall rule was required to allow TCP/80 traffic to flow between CLIENT01 and WEB01.

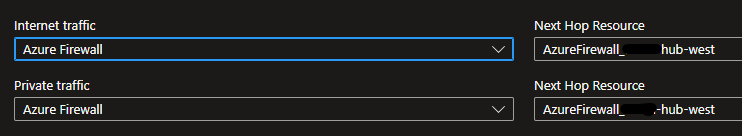

Within the vWAN Hub, we’ve enabled what’s called “Route Intent” policies. Microsoft has a very good, and lengthy, document on this, and this is where things began to get interesting for us. At its core, when configured, it’s a way to ensure that any virtual network peered into the vWAN Hub automatically has routes established for it.

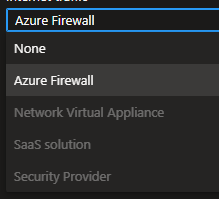

The above example shows that for both Internet-bound and Private-bound traffic, we’re configuring Route Intent to target our Azure Firewall, with the next hop being that resource. This ensures that all spoke-to-spoke traffic goes through the hub, and anything heading out to the Internet will also go through the hub. Your options for the route policies are, today, fairly limited.

On the project I’m working on, instead of the native Azure Firewall we were using one of the supported SaaS solutions deployed into the hub. Choice of firewall doesn’t (really) matter for this discussion, but our choice did make a difference when it came time to route the SaaS-based firewall solution to its management VM. That’s... another story.

So back to my lab…

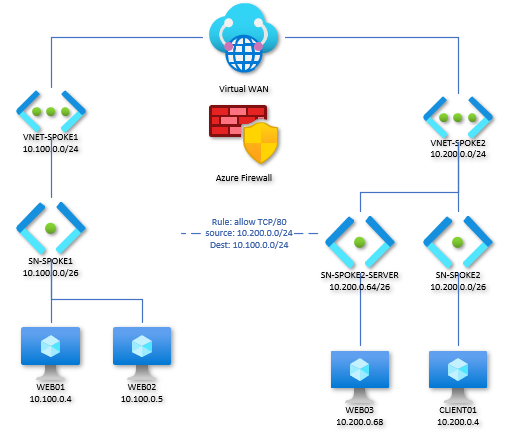

Expanding things out a bit, I’ve added a second web server within Spoke 1’s VNET and added a third web server on a new subnet within Spoke 2. At this point, the routing intent settings are doing precisely what we expect them to do. Each peered VNET has its next hop for all traffic set to the hub; CLIENT01 is able to reach all three web servers with just the one firewall rule.

But what happens if we want to access WEB01 from the outside? In our stubbornness, we didn’t want to go down the path of user-defined routes (UDRs), and we thought that the Route Intent settings were required. We have a proven track record of creating a mess with UDRs: a lack of skill in the past left us with poor design choices, the sins of our forefathers (hence the need for the project itself).

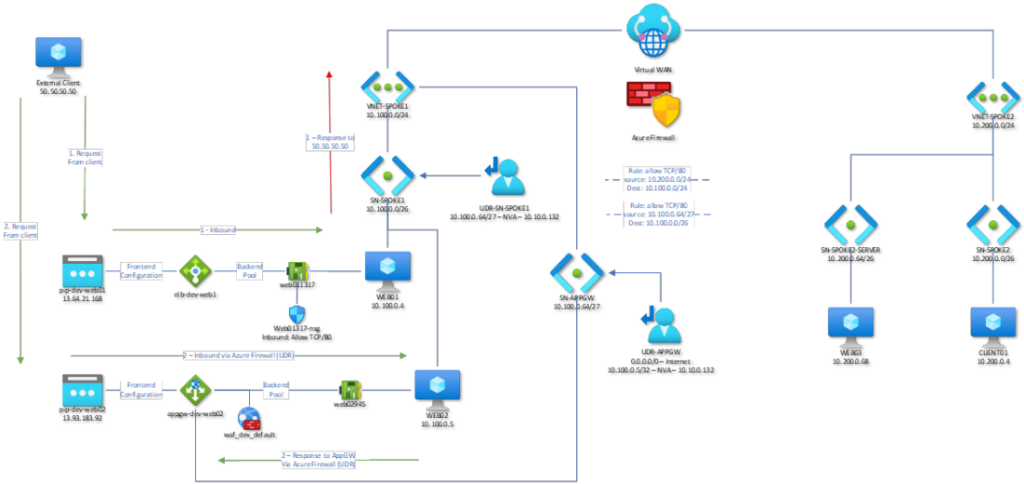

As just one example of how this can break, we see an External Load Balancer in front of WEB01. Traffic comes in from the external client, passes through the ELB, and hits the web server, but the web server then sends its reply to its next hop: the hub. This is because the External Load Balancer does not have any private IP on the virtual network.
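To make the return-path problem concrete, here is a minimal sketch of a longest-prefix-match lookup over the routes a spoke subnet might end up with once Route Intent is on. The prefixes, the firewall address, and the names `EFFECTIVE_ROUTES` and `next_hop` are all assumptions I made up for illustration; this is a toy model, not output from a real effective-routes view.

```python
import ipaddress

# Hypothetical values, not taken from the lab.
FIREWALL = "10.0.0.132"          # assumed private IP of the hub firewall
EFFECTIVE_ROUTES = [
    ("10.1.0.0/16", "VnetLocal"),    # assumed address space of Spoke 1
    ("10.0.0.0/8", FIREWALL),        # private traffic sent to the hub
    ("0.0.0.0/0", FIREWALL),         # Internet traffic sent to the hub
]


def next_hop(destination, routes):
    """Return the next hop of the most specific route containing destination."""
    dest = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(prefix), hop)
        for prefix, hop in routes
        if dest in ipaddress.ip_network(prefix)
    ]
    if not matches:
        return None
    # The longest prefix (largest prefixlen) wins.
    return max(matches, key=lambda match: match[0].prefixlen)[1]


# An external client reaches WEB01 through the ELB, so the reply is
# addressed to the client's public IP (a documentation address here).
external_client = "203.0.113.10"
reply_hop = next_hop(external_client, EFFECTIVE_ROUTES)

# The request came in from the Internet, but the reply heads to the firewall.
print("reply next hop:", reply_hop)
print("symmetric path:", reply_hop == "Internet")
```

In this model the only route matching the client's public address is the default route, so the reply leaves toward the hub rather than back out the way the request came in.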

This could be solved with a UDR attached to subnet SN-SPOKE1 above, but that would affect all workloads in that subnet, which isn’t really ideal. It also takes the firewall, and any packet inspection, out of the equation. Cybersecurity would wag a finger at this, so that’s out.

As with most Azure services, especially fairly complex ones, there’s a lot of documentation out there on vWAN – you just need to find the right one for what you’re trying to get done. I stumbled across this article talking about Application Gateway and Backend Pools with vWAN, which led to the working model below:

For this example, we start by creating a new subnet dedicated to the application gateway. Attached to that subnet is a UDR which will override the default route for 0.0.0.0/0 and set its next hop as Internet. An additional route for the server subnet is created, with next hop as the private IP of the Azure Firewall. Lastly, a firewall rule was needed to allow TCP/80 from the App Gateway subnet to the WEB02 subnet.

Inbound traffic will come in from the frontend IP of the App Gateway, go to the firewall for inspection, and then continue on to server WEB02. A second UDR was needed on the WEB02 subnet to tell the server that, to get to the App Gateway subnet, it must first go through the firewall.
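The two route tables described above can be sketched as a small route-selection model. It assumes the usual Azure behaviour that the longest matching prefix wins and that, for identical prefixes, a user-defined route beats one learned from the hub. Every address, the `Route` class, and the `select_route` helper are hypothetical names and values chosen for illustration, not the lab's real configuration.

```python
import ipaddress
from dataclasses import dataclass

# Hypothetical addressing; the real lab values are not given in this post.
FIREWALL_IP = "10.0.0.132"
APPGW_SUBNET = "10.1.10.0/24"
WEB02_SUBNET = "10.1.2.0/24"


@dataclass(frozen=True)
class Route:
    prefix: str
    next_hop: str
    source: str  # "user" for UDR entries, "hub" for routes learned from the vWAN hub


def select_route(destination, routes):
    """Pick the longest-prefix match; on equal prefixes a user route wins."""
    dest = ipaddress.ip_address(destination)
    candidates = [r for r in routes if dest in ipaddress.ip_network(r.prefix)]
    if not candidates:
        return None
    return max(
        candidates,
        key=lambda r: (ipaddress.ip_network(r.prefix).prefixlen, r.source == "user"),
    )


# Default route pushed to every peered VNet by Route Intent (assumed shape).
HUB_DEFAULT = Route("0.0.0.0/0", FIREWALL_IP, "hub")

# UDR on the App Gateway subnet: Internet for the default route,
# firewall for the server subnet.
appgw_subnet_routes = [
    HUB_DEFAULT,
    Route("0.0.0.0/0", "Internet", "user"),
    Route(WEB02_SUBNET, FIREWALL_IP, "user"),
]

# UDR on the WEB02 subnet: firewall for the App Gateway subnet.
web02_subnet_routes = [
    HUB_DEFAULT,
    Route(APPGW_SUBNET, FIREWALL_IP, "user"),
]

print(select_route("203.0.113.10", appgw_subnet_routes).next_hop)
print(select_route("10.1.2.4", appgw_subnet_routes).next_hop)
print(select_route("10.1.10.5", web02_subnet_routes).next_hop)
```

The point of the sketch is the tie-break: both default routes on the App Gateway subnet have the same prefix, so the user-defined one is what sends the gateway's own outbound traffic to the Internet while the more specific server-subnet route still goes through the firewall.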

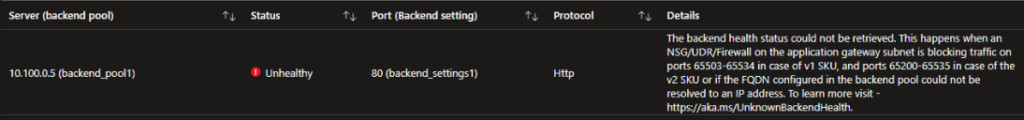

I was a bit unsure as to why the UDR was needed on the WEB02 subnet, but I can assure you it absolutely is required. Without it, the Application Gateway doesn’t seem to have a path to the server (or to be more specific, the server isn’t responding correctly to the App Gateway). This was shown with the backend pool health error:

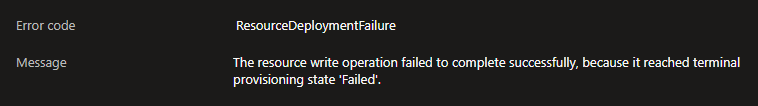

Another fun fact I learned through this process: if you don’t build the UDR and attach it to the Application Gateway subnet first, you won’t be able to build the Application Gateway. Ask me how I know.

The error message was truly bizarre as well.

During deployment, the App Gateway needs to reach out to Microsoft resources, and it had no path to do so. I initially discovered this when I disabled Route Intent for Internet on the vWAN Hub and redeployed the App Gateway (successfully).

In the end, Route Intent is an interesting setting, but it’s really “all or nothing”. We’ve learned that we can’t escape UDRs, but as our project unfolds, we’ll be more thoughtful about how UDRs are created and used. With luck, as we move into the next phase of our project (“landing zones”) we’ll be able to build a robust and scalable set of designs that application teams will find easy to use for their own work. Getting here was both fun and frustrating. Mostly fun – I leave this phase of the project smarter, and with a better understanding of vWAN routing concepts.