Years ago, I was asked the following question in an interview: “User calls and says their printer isn’t working. How would you troubleshoot this?”

It’s a silly question at face value, but I believe it was intended to be vague in order to have a conversation around the troubleshooting thought process. Off the top of my head it’s easy to come up with questions intended to collect data that can help narrow down the issue. Local printer or network printer? One user or all users? When was the last time you could print to it? Does it have paper? Is it on? Etc. etc.

One of the reasons I wanted to start this blog was to document my own troubleshooting processes and perhaps give others the tools necessary to start asking questions and digging deeper into issues, especially when I’ve spent time researching a topic to find a solution. Some people go one layer deep when trying to find a solution and then get lost. But just because it’s dark doesn’t mean there isn’t another path to take; you just need to find it.

I’ll use a specific example and go a few layers deep to talk through how it was solved. I worked with a colleague to really flesh out some of the solutions.

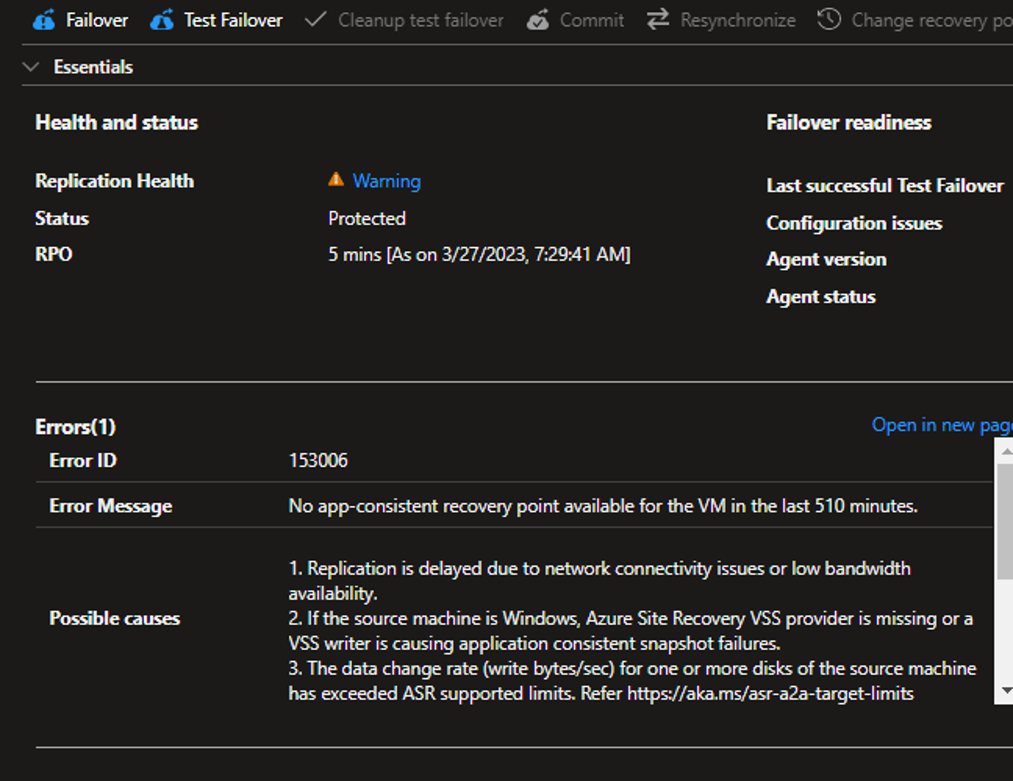

The example we’ll use relates to Azure Site Recovery being deployed to a couple hundred servers. Out of that batch, there are a large number of warnings – this is an example:

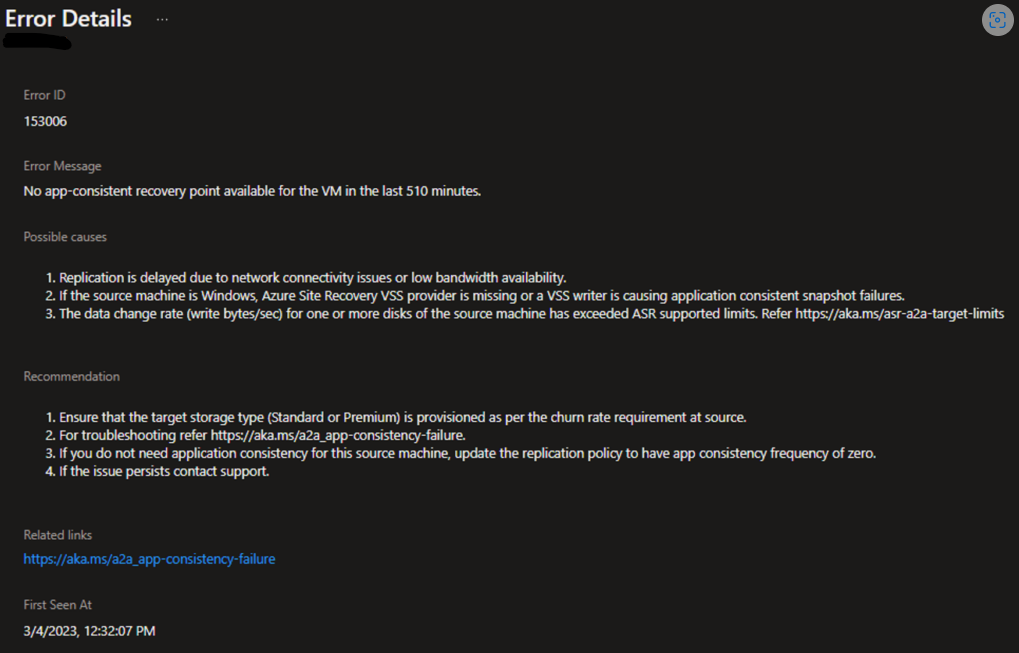

Azure lets you drill down a little bit further and get more information on that specific error:

In this example, we have a server where replication is running successfully but there is no app-consistent recovery point. Microsoft gives us some possible causes and recommendations. So... how would you troubleshoot this?

Tier 1 – The Basics – “Is it plugged in?”

Troubleshooting is part art, part science. It’s a repeatable process: gather data, form a hypothesis, act, check. In nearly all cases, there’s no obvious right or wrong place to begin the troubleshooting process, though some starting points make more sense than others. For instance, I wouldn’t jump to restarting the server first. For issues with servers, a restart is the one thing you want to avoid at all costs.

Who hasn’t seen this meme in their career?

Don’t. Reboot. Servers.

In this case, we did start with Azure Site Recovery. We disabled replication, re-enabled replication and waited to see what happened. There was no harm in that: it didn’t require a reboot and was fairly quick to do. We wanted to rule out the possibility that it was the ASR agent that was somehow not cooperating. But alas, same result.

This is the first layer down the rabbit hole where I’ll sometimes see people stop. The old joke “I’ve tried nothing and I’m out of ideas” comes to mind. But in troubleshooting, we need more data in order to make an informed hypothesis. For this situation we need to get onto the server and start looking around.

Tier 2 – “Data, data, data. I cannot make bricks without clay!”

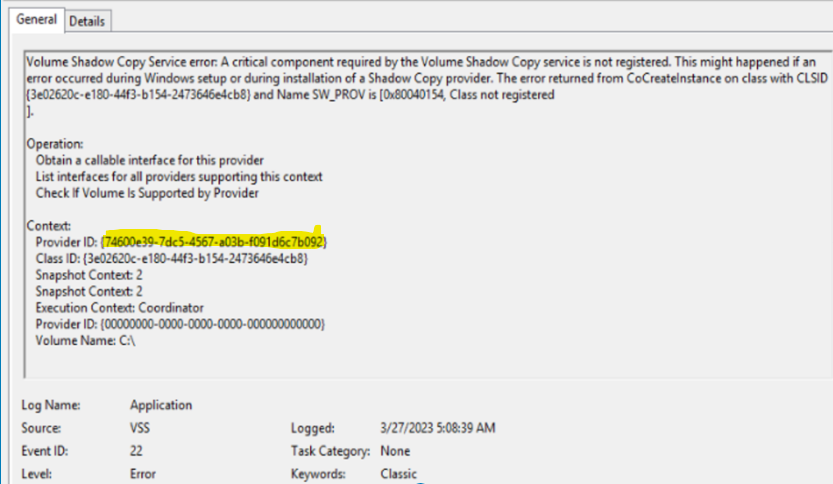

The most important thing when troubleshooting a problem is data. The more data you have around the symptoms, the environment, the scope, the scale, etc. the better. When troubleshooting a problem on a Windows server, the first place you should always look is the Event Log. In this instance, we’re looking for either Azure Site Recovery or VSS errors. And here we’ve found one:
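As a rough sketch of that first look, the Event Log can also be queried from PowerShell instead of clicking through Event Viewer. The function names below are made up for illustration, and the 'VSS' source name, the Application log, and the seven-day window are assumptions about where the errors land. Azure Site Recovery events are not covered here because their source name varies by agent.

```powershell
# Hypothetical helper: builds a filter for VSS errors in the Application log.
# Assumptions: source name 'VSS', Level 2 (Error), and a look-back window in days.
function New-VssErrorFilter {
    param([int]$Days = 7)
    @{
        LogName      = 'Application'
        ProviderName = 'VSS'
        Level        = 2
        StartTime    = (Get-Date).AddDays(-$Days)
    }
}

# Hypothetical wrapper: runs the query on the local Windows server.
function Get-RecentVssError {
    param([int]$Days = 7, [int]$MaxEvents = 50)
    # Get-WinEvent writes an error when nothing matches, so that is silenced here.
    Get-WinEvent -FilterHashtable (New-VssErrorFilter -Days $Days) -MaxEvents $MaxEvents -ErrorAction SilentlyContinue |
        Select-Object TimeCreated, Id, ProviderName, Message
}
```

On a server, `Get-RecentVssError -Days 3 | Format-List` would list whatever VSS errors were logged in the last three days.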

I’m going to pause briefly here and talk about the Volume Shadow Copy Service (VSS). Third-party applications use it to make snapshots for replication purposes or, more commonly, backup. Microsoft has some fantastic, in-depth documentation that you should be aware of if you want to get a deeper understanding of how it works.

Overview of Processing a Backup Under VSS – Win32 apps | Microsoft Learn

Working left to right you have a Requestor that talks to Backup Components. The Backup Components talk to Writers, and the Writers talk to back-end Providers. In the error above we see that it speaks very specifically about a provider not having some components registered. This is where we roll our sleeves up.

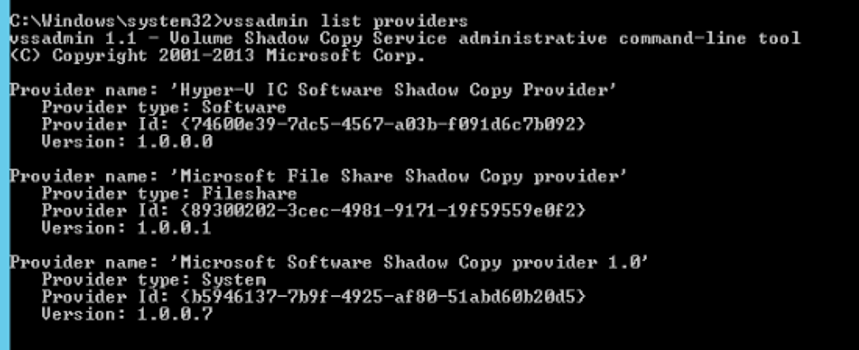

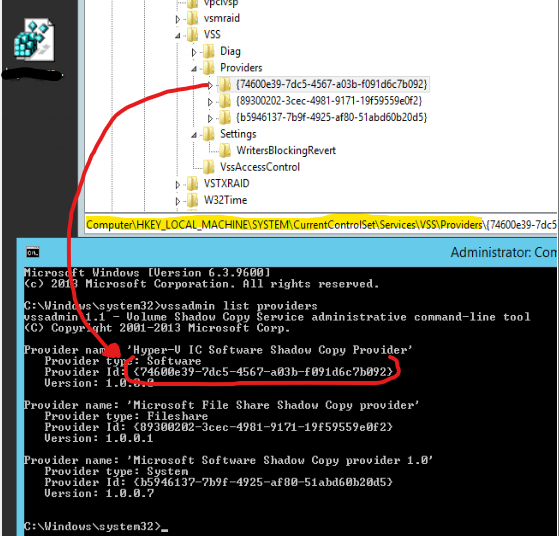

If you’re not familiar, Windows has a built-in tool called vssadmin which lets you collect some potentially useful information. By running vssadmin list providers on the server we get three items:

From doing some digging online, we find that the second and third providers are built-in, native. Microsoft File Share Shadow Copy provider looks to have been introduced as part of an enhancement to Server 2012 R2 (according to this older Microsoft blog post).
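If you want to compare providers across machines rather than eyeball the text, the output can be turned into objects. This is a minimal sketch, assuming the English-language output layout (a "Provider name:" line followed by indented "Provider type:", "Provider Id:", and "Version:" lines). The function name is hypothetical.

```powershell
# Hypothetical parser for the text output of 'vssadmin list providers'.
# Assumption: English output with 'Provider name:', 'Provider type:', 'Provider Id:', 'Version:' lines.
function ConvertFrom-VssProviderList {
    param([string[]]$Lines)
    $current = $null
    foreach ($line in $Lines) {
        if ($line -match "^\s*Provider name:\s*'(.+)'") {
            # A new provider block starts: emit the previous one, if any.
            if ($null -ne $current) { [pscustomobject]$current }
            $current = [ordered]@{ Name = $Matches[1]; Type = $null; Id = $null; Version = $null }
        } elseif ($null -ne $current -and $line -match '^\s*Provider type:\s*(.+?)\s*$') {
            $current.Type = $Matches[1]
        } elseif ($null -ne $current -and $line -match '^\s*Provider Id:\s*(\{[0-9a-fA-F-]+\})') {
            $current.Id = $Matches[1]
        } elseif ($null -ne $current -and $line -match '^\s*Version:\s*(.+?)\s*$') {
            $current.Version = $Matches[1]
        }
    }
    # Emit the last provider block.
    if ($null -ne $current) { [pscustomobject]$current }
}
```

On a server this could be called as `ConvertFrom-VssProviderList -Lines (vssadmin list providers)`, and the resulting Id values compared against the provider ID named in the Event Log error.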

In this case, I’m left with the Hyper-V IC Software Shadow Copy Provider. As of the writing of this post, I’ve no idea where this provider comes from. I’ve seen this provider on multiple servers with issues, and have verified that no other backup product has been installed. But the provider ID in the output above matches the provider ID in the Event Log error, and we know it isn’t used. The next step was to remove that provider and test. To do that, we need to venture into the registry.

The registry can be a scary place. It’s very easy to cause significant damage to your system if you don’t know what you’re looking at. For VSS providers, luckily it’s pretty straightforward. You can see the location above under the HKEY_LOCAL_MACHINE hive. What I did in this case was right click the provider with the matching GUID and export it (saving it to the desktop). Then I removed the key. Next, I ran net stop vss && net start vss in a Command Prompt to restart the VSS service and test.
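The same export, remove, restart sequence can be sketched in PowerShell. This is an illustration under assumptions, not the exact steps we ran: the function name and backup file name are invented, and it relies on reg.exe for the export so the key can be restored by importing the .reg file. Run it with -WhatIf first to see what it would touch.

```powershell
# Hypothetical helper: back up a VSS provider key, remove it, and restart VSS.
# Assumptions: run elevated on the affected Windows server; names are illustrative.
function Remove-VssProviderKey {
    [CmdletBinding(SupportsShouldProcess)]
    param(
        [Parameter(Mandatory)][guid]$ProviderId,
        [string]$BackupFolder = $env:TEMP
    )
    # ToString('B') formats the GUID with braces, matching the registry key name.
    $subKey = 'SYSTEM\CurrentControlSet\Services\VSS\Providers\' + $ProviderId.ToString('B')
    $backup = Join-Path $BackupFolder ('vss-provider-{0}.reg' -f $ProviderId)

    if ($PSCmdlet.ShouldProcess("HKLM\$subKey", 'Export, remove, and restart VSS')) {
        # Export first; stop if the export fails so nothing is deleted without a backup.
        reg.exe export "HKLM\$subKey" $backup /y | Out-Null
        if ($LASTEXITCODE -ne 0) { throw 'Export failed; key left untouched.' }
        Remove-Item -LiteralPath "HKLM:\$subKey" -Recurse
        Restart-Service -Name VSS
    }
    # Return the path the backup was (or would be) written to.
    $backup
}
```

For example, `Remove-VssProviderKey -ProviderId '<provider GUID from the Event Log error>' -WhatIf` only reports the intended action and returns the would-be backup path.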

In a few cases, this worked. In others, it didn’t. In those that didn’t, we need to keep collecting data.

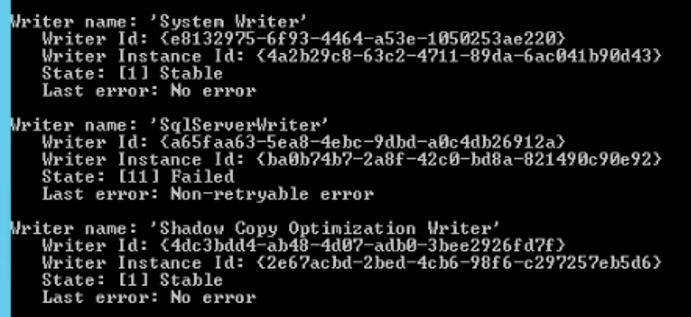

In one oddball case, we found VSS errors relating to a specific SQL Writer. If you run vssadmin list writers you’ll get a list of all the registered VSS Writers on the system:

This shows three writers (there are usually about 10 or so in a default installation). The middle writer is clearly a problem here, as the State shows as “Failed” and Last Error shows as “Non-retryable error”. As it turns out, this server has an application called “VSS Writer for Server 2016” installed, so this is almost certainly interfering with the operation of VSS.
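When the writer list is long, it can help to filter for anything that is not healthy. Here is a minimal sketch, assuming the English output layout ("Writer name:", "State: [n] ...", "Last error: ...") and a hypothetical function name.

```powershell
# Hypothetical parser for the text output of 'vssadmin list writers'.
# Assumption: English output with 'Writer name:', 'State: [n] <text>', and 'Last error:' lines.
function ConvertFrom-VssWriterList {
    param([string[]]$Lines)
    $current = $null
    foreach ($line in $Lines) {
        if ($line -match "^\s*Writer name:\s*'(.+)'") {
            # A new writer block starts: emit the previous one, if any.
            if ($null -ne $current) { [pscustomobject]$current }
            $current = [ordered]@{ Name = $Matches[1]; State = $null; LastError = $null }
        } elseif ($null -ne $current -and $line -match '^\s*State:\s*\[\d+\]\s*(.+?)\s*$') {
            $current.State = $Matches[1]
        } elseif ($null -ne $current -and $line -match '^\s*Last error:\s*(.+?)\s*$') {
            $current.LastError = $Matches[1]
        }
    }
    # Emit the last writer block.
    if ($null -ne $current) { [pscustomobject]$current }
}
```

On a server, `ConvertFrom-VssWriterList -Lines (vssadmin list writers) | Where-Object { $_.State -ne 'Stable' -or $_.LastError -ne 'No error' }` would leave only the writers worth a closer look.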

But let’s continue down another tunnel in the rabbit hole…

Tier 3 – “Knee deep in the dead”

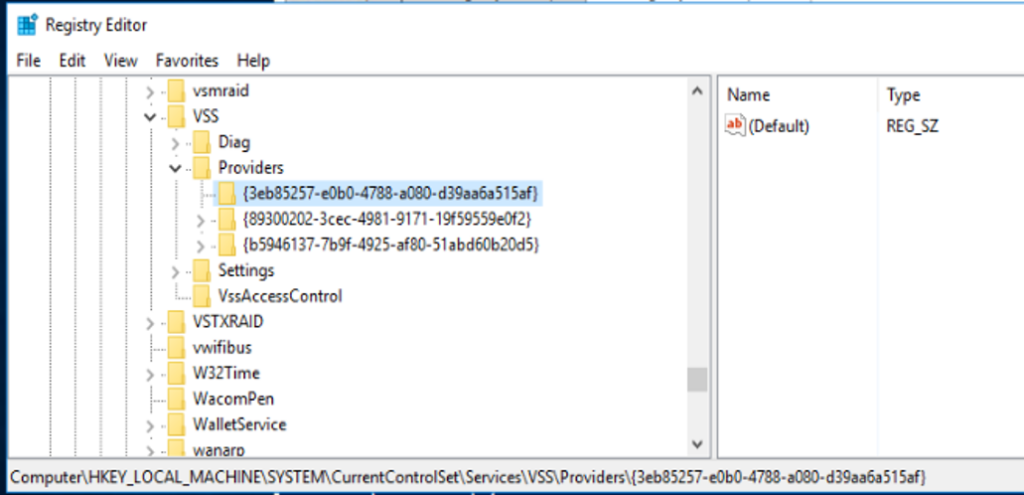

When poking around, you may come across something that just doesn’t make sense. In a number of other servers we investigated, we found an interesting registry key in the VSS providers which was completely empty:

This key, 3eb85257-e0b0-4788-a080-d39aa6a515af, was seen on a number of machines that included vague VSS errors in the event logs. The only thing we could guess was that an older version of the Azure Site Recovery agent had at some point been removed and some artifacts were left. But this was speculation. On one test server, we found that removing that empty key and restarting the VSS service cleared up some VSS errors, and actually caused ASR app-consistent backups to be taken properly.

To see precisely how many servers were affected, I wrote the following script. The output was a CSV file that I could then filter and get the exact list of servers.

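```powershell
# Collect every VSS provider key from each server in the input CSV (expects a "Name" column).
$allServers = Import-Csv $pathToServerList

$results = foreach ($server in $allServers) {
    try {
        # -ErrorAction Stop turns connection failures into terminating errors so the catch block runs.
        Invoke-Command -ComputerName $server.Name -ErrorAction Stop -ScriptBlock {
            Get-ChildItem -Path 'HKLM:\SYSTEM\CurrentControlSet\Services\VSS\Providers' |
                Select-Object Name, @{ Name = 'Property'; Expression = { $_.Property -join ';' } }
        }
    } catch {
        # Report unreachable servers instead of silently re-using the previous result.
        Write-Warning "Could not query $($server.Name): $_"
    }
}

# Invoke-Command adds PSComputerName to each returned object; an empty key has a blank Property.
$results | Select-Object Name, Property, PSComputerName |
    Export-Csv -Path $pathToOutputFile -NoTypeInformation
```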

You will want to have an input and output file defined, but this gave me a list of all servers and all the providers. I could then sort / filter on the empty one we identified above, and then with the following code snippet was able to remove the key and restart the VSS service cleanly.

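```powershell
# $pathToServerList should now point at the filtered list of affected servers ("Name" column).
$allServers = Import-Csv $pathToServerList

foreach ($server in $allServers) {
    try {
        Invoke-Command -ComputerName $server.Name -ErrorAction Stop -ScriptBlock {
            # Remove the empty provider key, then restart VSS so the change takes effect.
            Remove-Item -Path 'HKLM:\SYSTEM\CurrentControlSet\Services\VSS\Providers\{3eb85257-e0b0-4788-a080-d39aa6a515af}' -Confirm:$false -ErrorAction Stop
            Restart-Service -Name VSS
        }
    } catch {
        # Surface failures so no server is assumed fixed when it was not.
        Write-Warning "Failed on $($server.Name): $_"
    }
}
```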

Don’t judge my code, remember, I’m #BattleFaction.

Tier 4 – “Where we’re going, we don’t need roads…”

There are some seriously deep holes, and one of the things about troubleshooting in general is that you want to be careful not to follow any one hole too deeply, or you’ll end up chasing something that doesn’t truly matter in solving the issue at hand. Case in point…

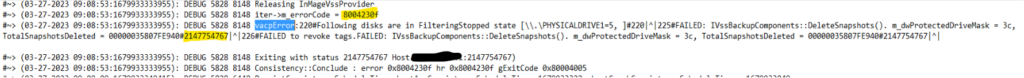

In troubleshooting the above, I found that there’s a log used for Azure Site Recovery located at C:\Program Files (x86)\Microsoft Azure Site Recovery\agent\Application Data\ApplicationPolicyLogs\vacp.log where I found the following error.

In the log you see a couple of highlighted errors. In doing online searches for this error, I landed on the protocol definition for MS-FSRVP (File Server Remote VSS Protocol):

[MS-FSRVP]: File Server Remote VSS Protocol | Microsoft Learn

This was an interesting reference document, but not exactly helpful in the troubleshooting pursuits. It can be easy to follow the trail and get lost, so at some point in the research efforts it’s helpful to realize whether you’ve gone too far. You may learn something (which is always a plus), but it may not be useful in solving the immediate problem at hand.

End of the trail

If you’ve made it this far, kudos to you. Troubleshooting is a skill that I honestly see being in short supply. Error messages will pop up and people will have no clue what to do next. It’s a skill that everyone should have. Be curious. Figure out how to solve a problem, and endeavor to learn new things along the way. It will only make you a more valuable resource!

Nice post.

There are some solid processes you tease out here. Five Whys and Ishikawa Diagrams certainly help.

And there’s always deeper and deeper into the mud. The Windows Resource Kit has VSS diagnostics tools too.